Making existing VR hardware more efficient is a big challenge, but the Oculus team may have found a way. According to their developer blog, they are introducing a new technique to optimize VR rendering: pixels rendered for one eye are reprojected to the other eye, and the gaps are filled in by an additional rendering pass. This saves around 20% of GPU resources, a development that could do a lot for the VR industry.

Oculus Rift Games May Become More Resource-Efficient

It is no secret that expensive VR headsets also require a powerful computer to render their graphics. This is one of the main reasons why non-gamers are unlikely to pick up an HTC Vive or Oculus Rift anytime soon. Most consumers have mediocre desktop PCs at best, which means they won’t get the best VR experience. Making rendering more efficient is the next logical step, although it is much easier said than done.

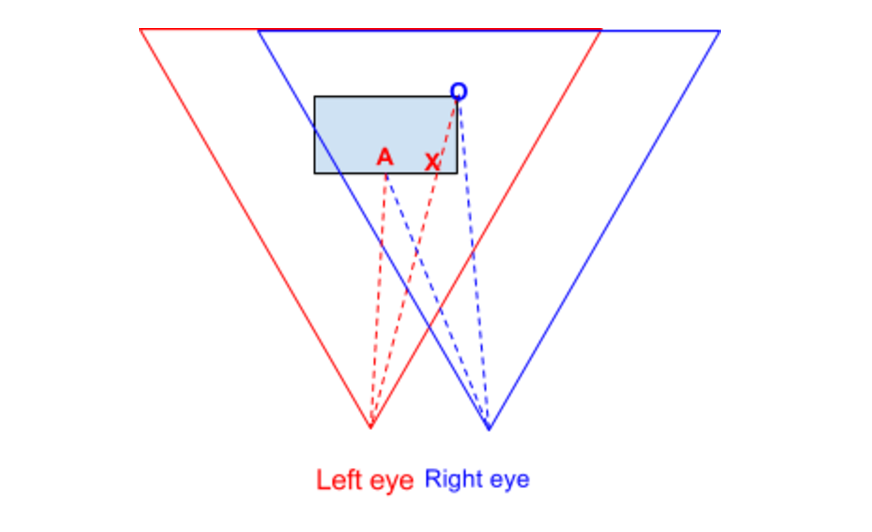

Oculus developers have come up with an intriguing solution: stereo shading reprojection for the Unity game engine. The net result is a GPU cost reduction of around 20% per frame, which is a substantial saving. Moreover, Oculus says the technology can easily be integrated into existing Unity projects. Virtual reality apps currently render every scene twice, once per eye, which strains even powerful hardware. Not everyone has that hardware at their disposal, resulting in subpar VR experiences.

Sharing pixel rendering work between both eyes alleviates some of those concerns. Stereo Shading Reprojection makes this sharing possible, which avoids shading the same pixels twice. It reduces GPU load because the first eye’s rendering result is reused to fill the second eye’s framebuffer. However, the result needs to be stereoscopically correct, and there must be a fallback for parts of a scene that do not work well with reprojection. The solution also needs to be easy to integrate into existing and in-development projects.
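To make the idea concrete, here is a minimal Python sketch of reprojecting one row of pixels from the left eye to the right eye. It is an illustration only, not the Oculus Unity implementation: it assumes a simple rectified stereo setup in which a pixel at depth z shifts horizontally by focal_px * baseline_m / z, and the names `reproject_scanline`, `fill_holes`, and `shade_right` are hypothetical.

```python
def reproject_scanline(left_color, left_depth, focal_px, baseline_m):
    """Reproject one row of left-eye pixels into the right eye.

    Assumes rectified cameras, so a point at depth z appears shifted
    left by focal_px * baseline_m / z pixels in the right eye.
    Pixels that nothing lands on stay None (holes).
    """
    width = len(left_color)
    right_color = [None] * width
    right_depth = [float("inf")] * width
    for x in range(width):
        z = left_depth[x]
        disparity = focal_px * baseline_m / z
        xr = round(x - disparity)
        # Keep the nearest surface when several pixels land on the same spot.
        if 0 <= xr < width and z < right_depth[xr]:
            right_color[xr] = left_color[x]
            right_depth[xr] = z
    return right_color, right_depth


def fill_holes(right_color, shade_right):
    """Fallback pass: shade only the pixels reprojection could not cover.

    shade_right is a hypothetical callback standing in for the normal,
    full-cost shading of a right-eye pixel. Returns the number of
    pixels that had to be shaded.
    """
    shaded = 0
    for x, color in enumerate(right_color):
        if color is None:
            right_color[x] = shade_right(x)
            shaded += 1
    return shaded
```

In this sketch, the fewer pixels `fill_holes` has to shade, the more work is shared between the eyes. Holes appear at the image edge and behind nearby objects, where the right eye sees surfaces the left eye could not.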

Do keep in mind that there are some limitations to what this technology can do. It can only be integrated into Unity right now, but that may change soon. Scenes with simple shaders will not benefit much from it either, since there is little shading cost to save. Additionally, both eye cameras need to be rendered sequentially. There is still a lot of exploring to be done as far as this technology is concerned. However, it is good to see the Oculus team come up with solutions that make VR less resource-intensive while keeping it fun to experience.
